# Evaluation

We propose a homogeneous evaluation of the solutions submitted to the airfoil design task, using the LIPS (Learning Industrial Physical Systems Benchmark suite) platform. The evaluation is performed through 3 categories that cover several aspects of augmented physical simulations, namely:

- ML-related: standard ML metrics (e.g. MAE, RMSE, etc.) and speed-up with respect to the reference solution computational time;

- Physical compliance: respect of underlying physical laws (e.g. Navier-Stokes equations);

- Application-based context: out-of-distribution (OOD) generalization, to extrapolate over minimal variations of the problem depending on the application; speed-up.

In the ideal case, one would expect a solution to perform equally well in all categories, but there is no guarantee of that. In particular, even though a solution may perform well in standard machine-learning evaluation, it is necessary to assess whether it also properly respects the underlying physics.

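For each category, specific criteria related to the airfoil design task are defined. The global score is a linear combination of the scores of the three evaluation categories:

$$\text{Score} = \alpha_{\text{ML}}\,\text{Score}_{\text{ML}} + \alpha_{\text{OOD}}\,\text{Score}_{\text{OOD}} + \alpha_{\text{PH}}\,\text{Score}_{\text{PH}}$$

where $\alpha_{\text{ML}}$, $\alpha_{\text{OOD}}$ and $\alpha_{\text{PH}}$ are the weights of the ML-related, OOD generalization and physics compliance categories, respectively.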
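To make the combination concrete, here is a minimal Python sketch. The `CategoryWeights` container, the `global_score` function and the weight values are hypothetical placeholders chosen for illustration; they are not the LIPS platform API nor the challenge configuration.

```python
from dataclasses import dataclass


@dataclass
class CategoryWeights:
    """Hypothetical container for the weights of the three categories."""
    ml: float   # weight of the ML-related sub-score
    ood: float  # weight of the OOD generalization sub-score
    ph: float   # weight of the physics compliance sub-score


def global_score(score_ml: float, score_ood: float, score_ph: float,
                 weights: CategoryWeights) -> float:
    """Linear combination of the three category sub-scores."""
    return (weights.ml * score_ml
            + weights.ood * score_ood
            + weights.ph * score_ph)


# Placeholder weights for illustration only (not the challenge configuration).
w = CategoryWeights(ml=0.5, ood=0.25, ph=0.25)
score = global_score(0.8, 0.6, 1.0, w)
```

With these placeholder weights the result is approximately 0.8; changing the weights shifts the emphasis between categories without changing the structure of the computation.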

We explain below how to compute the sub-score of each category.

# ML-related sub-score

This sub-score is a linear combination of 2 sub-criteria, namely Accuracy and SpeedUp:

$$\text{Score}_{\text{ML}} = \alpha_A\,\text{Score}_{\text{Accuracy}} + \alpha_S\,\text{Score}_{\text{SpeedUp}}$$

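For each quantity of interest, the accuracy is scored using two thresholds, calibrated to indicate whether the metric evaluated on that quantity gives an unacceptable, acceptable or great result. These outcomes correspond to 0 points, 1 point and 2 points, respectively. Within this sub-category, let:

- $N_u$ be the number of quantities with an unacceptable result;

- $N_a$ be the number of quantities with an acceptable result;

- $N_g$ be the number of quantities with a great result.

Let also $N = N_u + N_a + N_g$ be the total number of evaluated quantities. The accuracy score is the share of the maximal number of points that is obtained:

$$\text{Score}_{\text{Accuracy}} = \frac{1 \times N_a + 2 \times N_g}{2N}$$

A perfect score is obtained if all the given quantities provide great results. Indeed, we would then have $N_g = N$, and hence $\text{Score}_{\text{Accuracy}} = 1$.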
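The point-based scoring can be sketched as follows. This sketch assumes that the metric is an error (lower is better) and that a value equal to a threshold falls in the better class; both are assumptions, and a higher-is-better metric would need the comparisons reversed. The quantity names, error values and thresholds are hypothetical.

```python
from typing import Dict, Tuple


def points(error: float, thresholds: Tuple[float, float]) -> int:
    """Map an error metric (lower is better) to 0, 1 or 2 points.

    `thresholds` is a hypothetical (great, acceptable) pair: an error at or
    below `great` earns 2 points, at or below `acceptable` earns 1 point,
    and anything larger is unacceptable (0 points).
    """
    great, acceptable = thresholds
    if error <= great:
        return 2
    if error <= acceptable:
        return 1
    return 0


def accuracy_score(errors: Dict[str, float],
                   thresholds: Dict[str, Tuple[float, float]]) -> float:
    """Points obtained divided by the maximum of 2 points per quantity."""
    total = sum(points(err, thresholds[name]) for name, err in errors.items())
    return total / (2 * len(errors))


# Hypothetical quantities, errors and thresholds, for illustration only.
errors = {"x_velocity": 0.05, "pressure": 0.2, "turbulent_viscosity": 0.9}
thresholds = {name: (0.1, 0.3) for name in errors}
print(accuracy_score(errors, thresholds))  # 0.5
```

Here one quantity is great (2 points), one acceptable (1 point) and one unacceptable (0 points), so the score is 3 out of a maximum of 6 points.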

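For the speed-up criterion, the score is calibrated using the following quantities:

- $\text{SpeedUp} = \text{time}_{\text{ClassicalSolver}} / \text{time}_{\text{Inference}}$, the acceleration provided by the solution;

- $\text{time}_{\text{ClassicalSolver}}$, the elapsed time needed to solve the physical problem with the reference (classical) solver;

- $\text{time}_{\text{Inference}}$, the inference time of the proposed solution;

- $\text{SpeedUpMax}$, the maximal speed-up considered for the airfoil task.

In particular, there is no advantage in providing a solution whose speed-up exceeds $\text{SpeedUpMax}$: the speed-up score saturates at this value.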
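A minimal sketch of a speed-up score follows. The logarithmic calibration, the floor at 0 for speed-ups of at most 1, and the function and parameter names are all assumptions of this sketch; only the ratio of solver time to inference time and the cap at the maximal speed-up come from the text.

```python
import math


def speedup_score(time_classical_solver: float, time_inference: float,
                  speedup_max: float) -> float:
    """Speed-up score under an ASSUMED log10 calibration, capped at 1.

    The log10 shape and the floor at 0 are assumptions of this sketch;
    the cap reflects that a speed-up above speedup_max brings no gain.
    """
    speedup = time_classical_solver / time_inference
    if speedup <= 1.0:
        return 0.0  # no acceleration (or a slowdown) earns no points
    return min(math.log10(speedup) / math.log10(speedup_max), 1.0)


# Hypothetical timings (seconds) and cap, for illustration only.
half = speedup_score(10.0, 1.0, speedup_max=100.0)      # speed-up 10
capped = speedup_score(1000.0, 1.0, speedup_max=100.0)  # speed-up 1000 > cap
```

A logarithmic scale rewards each order of magnitude of acceleration equally, which is why a speed-up of 10 reaches half of the maximum when the cap is 100 in this sketch.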

Note that, while only the inference time appears explicitly in the score computation, this does not mean that the training time is of no concern. In particular, if the training time exceeds a given threshold, the proposed solution is rejected, which is equivalent to a global score of zero.
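The rejection rule can be sketched as a final gate on the global score. The function name, its parameters and the budget value below are hypothetical; the threshold itself is not specified here.

```python
def final_score(score: float, training_time: float,
                max_training_time: float) -> float:
    """Return the global score, or 0 if training exceeded the time budget.

    `max_training_time` is a hypothetical parameter standing for the
    rejection threshold mentioned in the text (same unit as training_time).
    """
    if training_time > max_training_time:
        return 0.0  # rejected solution: equivalent to a null global score
    return score


# Hypothetical budget of 7200 s, for illustration only.
kept = final_score(0.7, training_time=3600.0, max_training_time=7200.0)
rejected = final_score(0.7, training_time=9000.0, max_training_time=7200.0)
```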

# OOD generalization sub-score

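This sub-score evaluates the capability of the learned model to make predictions on an OOD dataset. In the OOD test set, the input data come from a different distribution than those used for training. The computation of this sub-score is similar to that of the ML-related sub-score: it combines an accuracy score, evaluated on the OOD test set, with the speed-up score.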
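The parallel between the two sub-scores can be sketched by applying the same routine to in-distribution and OOD errors. This sketch assumes that both sub-scores share the same thresholds, the same weights and the same speed-up score; the weights `alpha_a` and `alpha_s`, the quantity names and all numbers are hypothetical placeholders.

```python
from typing import Dict, Tuple


def points(error: float, thresholds: Tuple[float, float]) -> int:
    """0/1/2 points for an error metric, given (great, acceptable) thresholds."""
    great, acceptable = thresholds
    if error <= great:
        return 2
    if error <= acceptable:
        return 1
    return 0


def subscore(errors: Dict[str, float],
             thresholds: Dict[str, Tuple[float, float]],
             speedup_score: float, alpha_a: float, alpha_s: float) -> float:
    """Weighted combination of the accuracy score and the speed-up score."""
    total = sum(points(err, thresholds[name]) for name, err in errors.items())
    accuracy = total / (2 * len(errors))
    return alpha_a * accuracy + alpha_s * speedup_score


# Hypothetical thresholds, errors and weights, for illustration only.
thresholds = {"pressure": (0.1, 0.3), "x_velocity": (0.1, 0.3)}
errors_in_dist = {"pressure": 0.05, "x_velocity": 0.08}  # both great
errors_ood = {"pressure": 0.2, "x_velocity": 0.5}        # degraded on OOD data

score_ml = subscore(errors_in_dist, thresholds, 0.5, alpha_a=0.5, alpha_s=0.5)
score_ood = subscore(errors_ood, thresholds, 0.5, alpha_a=0.5, alpha_s=0.5)
```

A model that fits the training distribution well but extrapolates poorly keeps the same speed-up contribution while its OOD accuracy drops, which is what this sub-score is meant to expose.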

# Physics compliance sub-score

For the physics compliance sub-score, we evaluate the relative errors of physical variables. For each criterion, the score is also calibrated with 2 thresholds and gives 0/1/2 points, similarly to the accuracy criterion of the ML-related sub-score.
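A minimal sketch of this criterion: compute the relative error of each physical variable, convert it to points with two thresholds, and normalize by the maximal number of points. The variable names (drag and lift coefficients), their values and the thresholds are hypothetical, and the normalization mirrors the accuracy score by assumption.

```python
from typing import Dict, Tuple


def relative_error(predicted: float, reference: float) -> float:
    """Relative error |predicted - reference| / |reference|."""
    return abs(predicted - reference) / abs(reference)


def physics_score(predicted: Dict[str, float], reference: Dict[str, float],
                  thresholds: Dict[str, Tuple[float, float]]) -> float:
    """Points from relative errors, divided by the maximum of 2 per variable."""
    total = 0
    for name, ref in reference.items():
        err = relative_error(predicted[name], ref)
        great, acceptable = thresholds[name]
        if err <= great:
            total += 2
        elif err <= acceptable:
            total += 1
    return total / (2 * len(reference))


# Hypothetical values, for illustration only.
reference = {"drag_coefficient": 0.02, "lift_coefficient": 0.8}
predicted = {"drag_coefficient": 0.021, "lift_coefficient": 0.6}
thresholds = {name: (0.1, 0.3) for name in reference}
score_ph = physics_score(predicted, reference, thresholds)
```

Here the drag coefficient has a relative error of about 5% (great) and the lift coefficient about 25% (acceptable), giving 3 points out of 4.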

# Practical example

Using the notation introduced in the previous subsection, let us consider the following configuration:

•

•

•

•

•

•

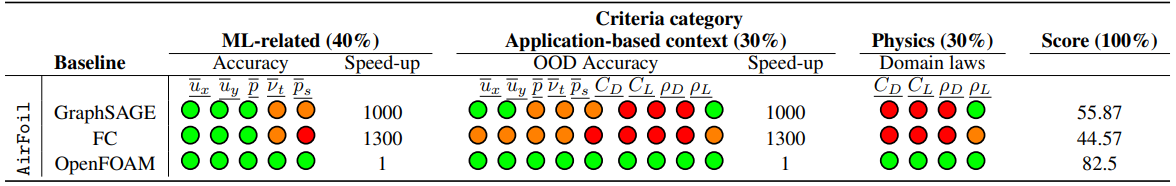

To further illustrate how the score is computed, Table 2 provides examples for the airfoil task.

As it is the most straightforward to compute, we start with the global score of the solution obtained with 'OpenFOAM', the physical solver used to produce the data. Since it is the reference physical solver, its accuracy is perfect, but its speed-up is only equal to 1 (no acceleration). Therefore, we obtain the following subscores:

•

•

•

Then, by combining them, the global score is

The procedure is the same for 'FC'; the associated subscores are:

•

•

•

Then, by combining them, the global score is

Table 1: Scoring table for the 3 tasks under the 3 categories of evaluation criteria for the considered configuration. The performances are reported using three colors, computed on the basis of two thresholds, which denote Unacceptable, Acceptable and Great results.
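The reasoning of the OpenFOAM case can be reproduced end to end with a small sketch. It assumes a log10 speed-up calibration (so a speed-up of 1 scores 0), sub-scores in [0, 1], and OOD and ML sub-scores that share the weights `alpha_a` and `alpha_s`. All weights below are hypothetical placeholders, so the resulting number is not the one reported in the scoring table.

```python
import math


def speedup_score(speedup: float, speedup_max: float) -> float:
    """ASSUMED log10 calibration, floored at 0 and capped at 1."""
    if speedup <= 1.0:
        return 0.0
    return min(math.log10(speedup) / math.log10(speedup_max), 1.0)


def global_score(acc_in_dist: float, acc_ood: float, physics: float,
                 speedup: float, w: dict) -> float:
    """Combine accuracy, speed-up and physics scores into a global score."""
    s = speedup_score(speedup, w["speedup_max"])
    score_ml = w["alpha_a"] * acc_in_dist + w["alpha_s"] * s
    score_ood = w["alpha_a"] * acc_ood + w["alpha_s"] * s
    return (w["alpha_ml"] * score_ml + w["alpha_ood"] * score_ood
            + w["alpha_ph"] * physics)


# Placeholder configuration, NOT the values of the considered configuration.
weights = {"alpha_ml": 0.5, "alpha_ood": 0.25, "alpha_ph": 0.25,
           "alpha_a": 0.5, "alpha_s": 0.5, "speedup_max": 100.0}

# Reference solver: perfect accuracy and physics, no acceleration (SpeedUp = 1).
reference_solver = global_score(1.0, 1.0, 1.0, 1.0, weights)
```

Under these assumptions, the reference solver cannot reach the maximal global score: its speed-up score is zero, so the result reduces to $\alpha_{\text{ML}}\alpha_A + \alpha_{\text{OOD}}\alpha_A + \alpha_{\text{PH}}$, which is 0.625 with the placeholder weights.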